import pandas as pd

import numpy as np

from sklearn.model_selection import KFold

import math

import pdb

Load Data¶

base_path = "/Users/loreliegordon/Library/Mobile Documents/com~apple~CloudDocs/Documents/root/Columbia/Fall2021/ELEN4720/Assignments/assignment2/"

X_all = pd.read_csv(base_path + "hw2-data/Bayes_classifier/X.csv", header=None)

one_col = np.ones(len(X_all)).reshape(len(X_all), 1)

X_all = np.concatenate([X_all, one_col], axis=1)

y_all = pd.read_csv(base_path + "hw2-data/Bayes_classifier/y.csv", header=None)

y_all = y_all.copy().to_numpy()

y_train = y_all

X_train = X_all

# y_train[y_train == 0] = -1

What the Logistic Regression Classifier does¶

Training¶

Predicting¶

def sigmoid(x):

"""Sigmoid function implementation"""

return float(1) / (1 + np.exp(-1*x))

def logistic_regression_loss_vectorized(W, X, y, reg):

"""

Logistic regression loss function, vectorized version.

Use this linear classification method to find optimal decision boundary.

Inputs and outputs are the same as softmax_loss_naive.

"""

loss = 0

# Initialize the gradient to zero

dW = np.zeros_like(W)

n = len(y)

f = X @ W

y = y.reshape(1, n)

sig_f = sigmoid(f)

loss_a = (y @ np.log(sig_f))/n

loss_b = ((1-y) @ np.log(1-sig_f))/n

loss_c = reg*(W**2).mean()

loss = loss_a + loss_b + loss_c

y = y.reshape((n, 1))

dW = X.T @ (y-sig_f)

dW = dW.reshape(W.shape)

dW -= (2*reg*(W))

return -1*loss[0], dW

def newtons_method_loss(W, W_old, X, y, reg):

dW = np.zeros_like(W)

n = len(y)

y = y.reshape((n, 1))

f = X @ W

y = y.reshape(1, n)

sig_f = sigmoid(f)

loss_a = (y @ np.log(sig_f))/n

loss_b = ((1-y) @ np.log(1-sig_f))/n

loss_c = reg*(W**2).mean()

loss = -1*(loss_a + loss_b + loss_c)

y = y.reshape((n, 1))

dW = X.T @ (y-sig_f)

dW = dW.reshape(W.shape)

D = (sig_f * (1 - sig_f))

D = D.reshape(len(D))

D = np.diag(D)

H = X.T @ D @ X

weight_change = (W - W_old)

grad_term = weight_change.T @ dW

sec_grad_term = 0.5*weight_change.T @ H @ weight_change

loss_prime = loss + grad_term + sec_grad_term

try:

dW_update = np.linalg.inv(H) @ dW

return loss_prime, dW_update.reshape(W.shape)

except:

print("couldn't calculate hessian")

return loss, dW

class BasicClassifier():

"""Basic classifier

- Training is done batched gradient descent

- f = X * W

-

"""

def __init__(self) -> None:

self.weights = None

def initialize_weights(self, dim):

self.weights = 0.001 * np.random.randn(dim, 1)

self.old_weights = 0.001 * np.random.randn(dim, 1)

def train(self, X_train, y_train, batch_size=100, learning_rate=0.00002, training_iterations=1000, reg=1e-5):

"""This function will implement batched gradient descent

"""

num_train = len(X_train)

num_predictors = len(X_train[0])

if self.weights is None:

self.initialize_weights(num_predictors)

all_loss = []

for i in range(training_iterations):

if batch_size:

random_iis = np.random.choice(range(num_train), size=batch_size)

X_batch = X_train[random_iis]

y_batch = y_train[random_iis]

else:

X_batch = X_train

y_batch = y_train

loss, dW = self.loss(X_batch, y_batch, regularization=reg)

all_loss.append(loss)

self.old_weights = self.weights

self.weights += learning_rate * dW

if i % 10 == 0:

print('iteration %d / %d: loss %f' % (i, training_iterations, loss))

return all_loss

def loss(self):

raise NotImplementedError("Inherit this class and overwrite this function.")

def fit(self, X_train, y_train):

"""Fit the input data

After this function the model parameters will be fit. We need to have:

-

Args:

X_train (np.array): Training features

y_train (np.array): Single column for the binary predicted class either 0 or 1

"""

return self.train(X_train, y_train)

def predict(self, X):

"""Predict new data

To predict we need to:

- Calculate the probability of being either class

- Choose the class with the higher probability

"""

probs = X @ self.weights

return probs > 0

class LogisticRegression(BasicClassifier):

"""LogisticRegression

This is an implementation from scratch that has the following:

- Input data x is assumed to follow a poisson distribution with prior gamma(2,1)

- Y follows a bernoulli

"""

def __init__(self, *args, **kwargs) -> None:

self.method = kwargs.pop("method", "grad_desc")

return super().__init__(*args, **kwargs)

def loss(self, X_train, y_train, regularization):

if self.method == "grad_desc":

return logistic_regression_loss_vectorized(self.weights, X_train, y_train, regularization)

elif self.method == "newton":

return newtons_method_loss(self.weights, self.old_weights, X_train, y_train, regularization)

y_test_1_y_pred_1 = []

y_test_0_y_pred_0 = []

y_test_1_y_pred_0 = []

y_test_0_y_pred_1 = []

all_losses = []

kf = KFold(n_splits=10, shuffle=True)

for train_index, test_index in kf.split(X_all):

print("------------------------------------ NEW TEST ------------------------------------")

X_train, X_test = X_all[train_index], X_all[test_index]

y_train, y_test = y_all[train_index], y_all[test_index]

cl = LogisticRegression()

loss_data = cl.train(X_train, y_train, learning_rate=0.01/4600, batch_size=4600, training_iterations=1000)

y_pred = cl.predict(X_test)

all_losses.append(loss_data)

joined = pd.concat([pd.DataFrame(y_pred, columns=["y_pred"]), pd.DataFrame(y_test, columns=["y_test"])], axis=1)

joined["correct"] = joined["y_pred"] == joined["y_test"]

y_test_1_y_pred_1.append(joined.loc[(joined["correct"] == True) & (joined["y_test"] == 1),"correct"].count()) # /len(y_test)

y_test_0_y_pred_0.append(joined.loc[(joined["correct"] == True) & (joined["y_test"] == 0),"correct"].count()) # /len(y_test)

y_test_1_y_pred_0.append(joined.loc[(joined["correct"] == False) & (joined["y_test"] == 1),"correct"].count()) # /len(y_test)

y_test_0_y_pred_1.append(joined.loc[(joined["correct"] == False) & (joined["y_test"] == 0),"correct"].count()) # /len(y_test)

y_test_0_y_pred_0_avg = sum(y_test_0_y_pred_0)#/len(y_test_0_y_pred_0)

y_test_0_y_pred_1_avg = sum(y_test_0_y_pred_1)#/len(y_test_0_y_pred_1)

y_test_1_y_pred_0_avg = sum(y_test_1_y_pred_0)#/len(y_test_1_y_pred_0)

y_test_1_y_pred_1_avg = sum(y_test_1_y_pred_1)#/len(y_test_1_y_pred_1)

[[y_test_0_y_pred_0_avg, y_test_0_y_pred_1_avg], [y_test_1_y_pred_0_avg, y_test_1_y_pred_1_avg]]

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.691085

iteration 10 / 1000: loss 0.357693

iteration 20 / 1000: loss 0.323370

iteration 30 / 1000: loss 0.294840

iteration 40 / 1000: loss 0.279016

iteration 50 / 1000: loss 0.266190

iteration 60 / 1000: loss 0.285706

iteration 70 / 1000: loss 0.275192

iteration 80 / 1000: loss 0.263988

iteration 90 / 1000: loss 0.253135

iteration 100 / 1000: loss 0.254973

iteration 110 / 1000: loss 0.255444

iteration 120 / 1000: loss 0.243830

iteration 130 / 1000: loss 0.253237

iteration 140 / 1000: loss 0.248854

iteration 150 / 1000: loss 0.248638

iteration 160 / 1000: loss 0.252007

iteration 170 / 1000: loss 0.238235

iteration 180 / 1000: loss 0.237929

iteration 190 / 1000: loss 0.239187

iteration 200 / 1000: loss 0.246461

iteration 210 / 1000: loss 0.245207

iteration 220 / 1000: loss 0.250331

iteration 230 / 1000: loss 0.230779

iteration 240 / 1000: loss 0.239603

iteration 250 / 1000: loss 0.222734

iteration 260 / 1000: loss 0.227986

iteration 270 / 1000: loss 0.240278

iteration 280 / 1000: loss 0.226218

iteration 290 / 1000: loss 0.233308

iteration 300 / 1000: loss 0.216332

iteration 310 / 1000: loss 0.234538

iteration 320 / 1000: loss 0.238447

iteration 330 / 1000: loss 0.223256

iteration 340 / 1000: loss 0.246746

iteration 350 / 1000: loss 0.222477

iteration 360 / 1000: loss 0.225580

iteration 370 / 1000: loss 0.237641

iteration 380 / 1000: loss 0.226645

iteration 390 / 1000: loss 0.216896

iteration 400 / 1000: loss 0.235533

iteration 410 / 1000: loss 0.226746

iteration 420 / 1000: loss 0.222692

iteration 430 / 1000: loss 0.220780

iteration 440 / 1000: loss 0.212416

iteration 450 / 1000: loss 0.221852

iteration 460 / 1000: loss 0.219290

iteration 470 / 1000: loss 0.226187

iteration 480 / 1000: loss 0.223016

iteration 490 / 1000: loss 0.223798

iteration 500 / 1000: loss 0.229763

iteration 510 / 1000: loss 0.228745

iteration 520 / 1000: loss 0.220119

iteration 530 / 1000: loss 0.220679

iteration 540 / 1000: loss 0.227342

iteration 550 / 1000: loss 0.231878

iteration 560 / 1000: loss 0.219044

iteration 570 / 1000: loss 0.223384

iteration 580 / 1000: loss 0.217516

iteration 590 / 1000: loss 0.219137

iteration 600 / 1000: loss 0.217904

iteration 610 / 1000: loss 0.223094

iteration 620 / 1000: loss 0.222566

iteration 630 / 1000: loss 0.221634

iteration 640 / 1000: loss 0.222223

iteration 650 / 1000: loss 0.213888

iteration 660 / 1000: loss 0.211998

iteration 670 / 1000: loss 0.226604

iteration 680 / 1000: loss 0.219435

iteration 690 / 1000: loss 0.222706

iteration 700 / 1000: loss 0.225436

iteration 710 / 1000: loss 0.226975

iteration 720 / 1000: loss 0.239506

iteration 730 / 1000: loss 0.212639

iteration 740 / 1000: loss 0.222727

iteration 750 / 1000: loss 0.228832

iteration 760 / 1000: loss 0.236641

iteration 770 / 1000: loss 0.220939

iteration 780 / 1000: loss 0.212482

iteration 790 / 1000: loss 0.220245

iteration 800 / 1000: loss 0.223192

iteration 810 / 1000: loss 0.219797

iteration 820 / 1000: loss 0.213775

iteration 830 / 1000: loss 0.243996

iteration 840 / 1000: loss 0.230919

iteration 850 / 1000: loss 0.228846

iteration 860 / 1000: loss 0.208959

iteration 870 / 1000: loss 0.230814

iteration 880 / 1000: loss 0.220524

iteration 890 / 1000: loss 0.228011

iteration 900 / 1000: loss 0.230403

iteration 910 / 1000: loss 0.219807

iteration 920 / 1000: loss 0.212930

iteration 930 / 1000: loss 0.227049

iteration 940 / 1000: loss 0.203386

iteration 950 / 1000: loss 0.216268

iteration 960 / 1000: loss 0.215008

iteration 970 / 1000: loss 0.223700

iteration 980 / 1000: loss 0.228997

iteration 990 / 1000: loss 0.208079

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.694400

iteration 10 / 1000: loss 0.372492

iteration 20 / 1000: loss 0.319682

iteration 30 / 1000: loss 0.301276

iteration 40 / 1000: loss 0.294716

iteration 50 / 1000: loss 0.283661

iteration 60 / 1000: loss 0.272061

iteration 70 / 1000: loss 0.268313

iteration 80 / 1000: loss 0.269299

iteration 90 / 1000: loss 0.267328

iteration 100 / 1000: loss 0.266036

iteration 110 / 1000: loss 0.261188

iteration 120 / 1000: loss 0.250763

iteration 130 / 1000: loss 0.265923

iteration 140 / 1000: loss 0.253634

iteration 150 / 1000: loss 0.245941

iteration 160 / 1000: loss 0.245508

iteration 170 / 1000: loss 0.234328

iteration 180 / 1000: loss 0.230063

iteration 190 / 1000: loss 0.230185

iteration 200 / 1000: loss 0.244117

iteration 210 / 1000: loss 0.242754

iteration 220 / 1000: loss 0.250163

iteration 230 / 1000: loss 0.230950

iteration 240 / 1000: loss 0.249192

iteration 250 / 1000: loss 0.236453

iteration 260 / 1000: loss 0.233589

iteration 270 / 1000: loss 0.239098

iteration 280 / 1000: loss 0.236487

iteration 290 / 1000: loss 0.233646

iteration 300 / 1000: loss 0.235545

iteration 310 / 1000: loss 0.230797

iteration 320 / 1000: loss 0.242762

iteration 330 / 1000: loss 0.232512

iteration 340 / 1000: loss 0.248315

iteration 350 / 1000: loss 0.242766

iteration 360 / 1000: loss 0.245148

iteration 370 / 1000: loss 0.230836

iteration 380 / 1000: loss 0.226699

iteration 390 / 1000: loss 0.251263

iteration 400 / 1000: loss 0.231929

iteration 410 / 1000: loss 0.243981

iteration 420 / 1000: loss 0.229928

iteration 430 / 1000: loss 0.225779

iteration 440 / 1000: loss 0.227971

iteration 450 / 1000: loss 0.229669

iteration 460 / 1000: loss 0.236438

iteration 470 / 1000: loss 0.231128

iteration 480 / 1000: loss 0.231995

iteration 490 / 1000: loss 0.228665

iteration 500 / 1000: loss 0.228680

iteration 510 / 1000: loss 0.218954

iteration 520 / 1000: loss 0.226357

iteration 530 / 1000: loss 0.227871

iteration 540 / 1000: loss 0.230305

iteration 550 / 1000: loss 0.228127

iteration 560 / 1000: loss 0.232739

iteration 570 / 1000: loss 0.230652

iteration 580 / 1000: loss 0.214388

iteration 590 / 1000: loss 0.234335

iteration 600 / 1000: loss 0.230386

iteration 610 / 1000: loss 0.216675

iteration 620 / 1000: loss 0.231893

iteration 630 / 1000: loss 0.228668

iteration 640 / 1000: loss 0.233972

iteration 650 / 1000: loss 0.225766

iteration 660 / 1000: loss 0.223170

iteration 670 / 1000: loss 0.211295

iteration 680 / 1000: loss 0.241156

iteration 690 / 1000: loss 0.211135

iteration 700 / 1000: loss 0.243140

iteration 710 / 1000: loss 0.229229

iteration 720 / 1000: loss 0.217964

iteration 730 / 1000: loss 0.222277

iteration 740 / 1000: loss 0.230158

iteration 750 / 1000: loss 0.235117

iteration 760 / 1000: loss 0.219266

iteration 770 / 1000: loss 0.217187

iteration 780 / 1000: loss 0.221517

iteration 790 / 1000: loss 0.238290

iteration 800 / 1000: loss 0.225401

iteration 810 / 1000: loss 0.240281

iteration 820 / 1000: loss 0.228587

iteration 830 / 1000: loss 0.223454

iteration 840 / 1000: loss 0.216463

iteration 850 / 1000: loss 0.219221

iteration 860 / 1000: loss 0.233202

iteration 870 / 1000: loss 0.225574

iteration 880 / 1000: loss 0.214920

iteration 890 / 1000: loss 0.203896

iteration 900 / 1000: loss 0.212708

iteration 910 / 1000: loss 0.219118

iteration 920 / 1000: loss 0.202962

iteration 930 / 1000: loss 0.231896

iteration 940 / 1000: loss 0.214641

iteration 950 / 1000: loss 0.221556

iteration 960 / 1000: loss 0.226753

iteration 970 / 1000: loss 0.209505

iteration 980 / 1000: loss 0.225170

iteration 990 / 1000: loss 0.217815

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.693763

iteration 10 / 1000: loss 0.366887

iteration 20 / 1000: loss 0.321666

iteration 30 / 1000: loss 0.305422

iteration 40 / 1000: loss 0.288466

iteration 50 / 1000: loss 0.267797

iteration 60 / 1000: loss 0.279133

iteration 70 / 1000: loss 0.265880

iteration 80 / 1000: loss 0.257001

iteration 90 / 1000: loss 0.268522

iteration 100 / 1000: loss 0.262486

iteration 110 / 1000: loss 0.264208

iteration 120 / 1000: loss 0.256291

iteration 130 / 1000: loss 0.262243

iteration 140 / 1000: loss 0.255959

iteration 150 / 1000: loss 0.247186

iteration 160 / 1000: loss 0.253604

iteration 170 / 1000: loss 0.246512

iteration 180 / 1000: loss 0.241064

iteration 190 / 1000: loss 0.244522

iteration 200 / 1000: loss 0.235111

iteration 210 / 1000: loss 0.244929

iteration 220 / 1000: loss 0.237956

iteration 230 / 1000: loss 0.242173

iteration 240 / 1000: loss 0.258290

iteration 250 / 1000: loss 0.251716

iteration 260 / 1000: loss 0.255959

iteration 270 / 1000: loss 0.240398

iteration 280 / 1000: loss 0.237468

iteration 290 / 1000: loss 0.229578

iteration 300 / 1000: loss 0.226887

iteration 310 / 1000: loss 0.241421

iteration 320 / 1000: loss 0.239123

iteration 330 / 1000: loss 0.225896

iteration 340 / 1000: loss 0.222883

iteration 350 / 1000: loss 0.241183

iteration 360 / 1000: loss 0.227334

iteration 370 / 1000: loss 0.237658

iteration 380 / 1000: loss 0.239213

iteration 390 / 1000: loss 0.220698

iteration 400 / 1000: loss 0.252110

iteration 410 / 1000: loss 0.220904

iteration 420 / 1000: loss 0.230460

iteration 430 / 1000: loss 0.220502

iteration 440 / 1000: loss 0.224511

iteration 450 / 1000: loss 0.231083

iteration 460 / 1000: loss 0.236511

iteration 470 / 1000: loss 0.224803

iteration 480 / 1000: loss 0.225471

iteration 490 / 1000: loss 0.228224

iteration 500 / 1000: loss 0.222720

iteration 510 / 1000: loss 0.225799

iteration 520 / 1000: loss 0.231897

iteration 530 / 1000: loss 0.224402

iteration 540 / 1000: loss 0.239963

iteration 550 / 1000: loss 0.231306

iteration 560 / 1000: loss 0.227451

iteration 570 / 1000: loss 0.213351

iteration 580 / 1000: loss 0.222617

iteration 590 / 1000: loss 0.226543

iteration 600 / 1000: loss 0.217618

iteration 610 / 1000: loss 0.238608

iteration 620 / 1000: loss 0.226436

iteration 630 / 1000: loss 0.212187

iteration 640 / 1000: loss 0.238540

iteration 650 / 1000: loss 0.214194

iteration 660 / 1000: loss 0.235245

iteration 670 / 1000: loss 0.213083

iteration 680 / 1000: loss 0.234947

iteration 690 / 1000: loss 0.243880

iteration 700 / 1000: loss 0.225470

iteration 710 / 1000: loss 0.211373

iteration 720 / 1000: loss 0.229381

iteration 730 / 1000: loss 0.250709

iteration 740 / 1000: loss 0.226172

iteration 750 / 1000: loss 0.222252

iteration 760 / 1000: loss 0.213172

iteration 770 / 1000: loss 0.220756

iteration 780 / 1000: loss 0.221858

iteration 790 / 1000: loss 0.220508

iteration 800 / 1000: loss 0.220740

iteration 810 / 1000: loss 0.224552

iteration 820 / 1000: loss 0.226641

iteration 830 / 1000: loss 0.222827

iteration 840 / 1000: loss 0.218028

iteration 850 / 1000: loss 0.209134

iteration 860 / 1000: loss 0.229362

iteration 870 / 1000: loss 0.230729

iteration 880 / 1000: loss 0.230116

iteration 890 / 1000: loss 0.214454

iteration 900 / 1000: loss 0.220869

iteration 910 / 1000: loss 0.227691

iteration 920 / 1000: loss 0.222155

iteration 930 / 1000: loss 0.220445

iteration 940 / 1000: loss 0.235513

iteration 950 / 1000: loss 0.218192

iteration 960 / 1000: loss 0.226654

iteration 970 / 1000: loss 0.210401

iteration 980 / 1000: loss 0.222981

iteration 990 / 1000: loss 0.229595

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.688756

iteration 10 / 1000: loss 0.364849

iteration 20 / 1000: loss 0.329839

iteration 30 / 1000: loss 0.304431

iteration 40 / 1000: loss 0.287994

iteration 50 / 1000: loss 0.285706

iteration 60 / 1000: loss 0.279181

iteration 70 / 1000: loss 0.284815

iteration 80 / 1000: loss 0.263642

iteration 90 / 1000: loss 0.278371

iteration 100 / 1000: loss 0.269218

iteration 110 / 1000: loss 0.251704

iteration 120 / 1000: loss 0.255134

iteration 130 / 1000: loss 0.251138

iteration 140 / 1000: loss 0.254626

iteration 150 / 1000: loss 0.254990

iteration 160 / 1000: loss 0.260979

iteration 170 / 1000: loss 0.255101

iteration 180 / 1000: loss 0.249310

iteration 190 / 1000: loss 0.252939

iteration 200 / 1000: loss 0.249485

iteration 210 / 1000: loss 0.243689

iteration 220 / 1000: loss 0.255958

iteration 230 / 1000: loss 0.254518

iteration 240 / 1000: loss 0.251950

iteration 250 / 1000: loss 0.247435

iteration 260 / 1000: loss 0.239697

iteration 270 / 1000: loss 0.240425

iteration 280 / 1000: loss 0.249609

iteration 290 / 1000: loss 0.240935

iteration 300 / 1000: loss 0.230463

iteration 310 / 1000: loss 0.231067

iteration 320 / 1000: loss 0.243099

iteration 330 / 1000: loss 0.245830

iteration 340 / 1000: loss 0.244099

iteration 350 / 1000: loss 0.234691

iteration 360 / 1000: loss 0.245076

iteration 370 / 1000: loss 0.232234

iteration 380 / 1000: loss 0.233299

iteration 390 / 1000: loss 0.229938

iteration 400 / 1000: loss 0.236311

iteration 410 / 1000: loss 0.220086

iteration 420 / 1000: loss 0.236271

iteration 430 / 1000: loss 0.235059

iteration 440 / 1000: loss 0.231673

iteration 450 / 1000: loss 0.231980

iteration 460 / 1000: loss 0.239632

iteration 470 / 1000: loss 0.230535

iteration 480 / 1000: loss 0.247934

iteration 490 / 1000: loss 0.232337

iteration 500 / 1000: loss 0.228523

iteration 510 / 1000: loss 0.228575

iteration 520 / 1000: loss 0.223696

iteration 530 / 1000: loss 0.226307

iteration 540 / 1000: loss 0.246694

iteration 550 / 1000: loss 0.223324

iteration 560 / 1000: loss 0.233816

iteration 570 / 1000: loss 0.221520

iteration 580 / 1000: loss 0.230282

iteration 590 / 1000: loss 0.249834

iteration 600 / 1000: loss 0.242335

iteration 610 / 1000: loss 0.227990

iteration 620 / 1000: loss 0.239522

iteration 630 / 1000: loss 0.227653

iteration 640 / 1000: loss 0.231329

iteration 650 / 1000: loss 0.225231

iteration 660 / 1000: loss 0.225629

iteration 670 / 1000: loss 0.243444

iteration 680 / 1000: loss 0.241739

iteration 690 / 1000: loss 0.219661

iteration 700 / 1000: loss 0.226910

iteration 710 / 1000: loss 0.238445

iteration 720 / 1000: loss 0.227833

iteration 730 / 1000: loss 0.224551

iteration 740 / 1000: loss 0.216476

iteration 750 / 1000: loss 0.228348

iteration 760 / 1000: loss 0.242185

iteration 770 / 1000: loss 0.229589

iteration 780 / 1000: loss 0.238089

iteration 790 / 1000: loss 0.227829

iteration 800 / 1000: loss 0.233429

iteration 810 / 1000: loss 0.240798

iteration 820 / 1000: loss 0.234921

iteration 830 / 1000: loss 0.230281

iteration 840 / 1000: loss 0.212368

iteration 850 / 1000: loss 0.243171

iteration 860 / 1000: loss 0.239865

iteration 870 / 1000: loss 0.232572

iteration 880 / 1000: loss 0.229844

iteration 890 / 1000: loss 0.219853

iteration 900 / 1000: loss 0.221547

iteration 910 / 1000: loss 0.220132

iteration 920 / 1000: loss 0.231083

iteration 930 / 1000: loss 0.219633

iteration 940 / 1000: loss 0.229910

iteration 950 / 1000: loss 0.214044

iteration 960 / 1000: loss 0.233360

iteration 970 / 1000: loss 0.228380

iteration 980 / 1000: loss 0.223965

iteration 990 / 1000: loss 0.220600

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.686368

iteration 10 / 1000: loss 0.357341

iteration 20 / 1000: loss 0.318981

iteration 30 / 1000: loss 0.303229

iteration 40 / 1000: loss 0.293290

iteration 50 / 1000: loss 0.292153

iteration 60 / 1000: loss 0.275950

iteration 70 / 1000: loss 0.269409

iteration 80 / 1000: loss 0.261372

iteration 90 / 1000: loss 0.266059

iteration 100 / 1000: loss 0.247912

iteration 110 / 1000: loss 0.251767

iteration 120 / 1000: loss 0.239528

iteration 130 / 1000: loss 0.256071

iteration 140 / 1000: loss 0.233818

iteration 150 / 1000: loss 0.240627

iteration 160 / 1000: loss 0.251953

iteration 170 / 1000: loss 0.253709

iteration 180 / 1000: loss 0.242456

iteration 190 / 1000: loss 0.241249

iteration 200 / 1000: loss 0.238237

iteration 210 / 1000: loss 0.232752

iteration 220 / 1000: loss 0.238111

iteration 230 / 1000: loss 0.247885

iteration 240 / 1000: loss 0.236312

iteration 250 / 1000: loss 0.233510

iteration 260 / 1000: loss 0.238776

iteration 270 / 1000: loss 0.239301

iteration 280 / 1000: loss 0.244355

iteration 290 / 1000: loss 0.236337

iteration 300 / 1000: loss 0.233937

iteration 310 / 1000: loss 0.228712

iteration 320 / 1000: loss 0.239237

iteration 330 / 1000: loss 0.237543

iteration 340 / 1000: loss 0.231561

iteration 350 / 1000: loss 0.232077

iteration 360 / 1000: loss 0.229169

iteration 370 / 1000: loss 0.233267

iteration 380 / 1000: loss 0.231036

iteration 390 / 1000: loss 0.232650

iteration 400 / 1000: loss 0.233000

iteration 410 / 1000: loss 0.220220

iteration 420 / 1000: loss 0.221883

iteration 430 / 1000: loss 0.237341

iteration 440 / 1000: loss 0.224529

iteration 450 / 1000: loss 0.234006

iteration 460 / 1000: loss 0.230238

iteration 470 / 1000: loss 0.234494

iteration 480 / 1000: loss 0.235248

iteration 490 / 1000: loss 0.230099

iteration 500 / 1000: loss 0.223887

iteration 510 / 1000: loss 0.231949

iteration 520 / 1000: loss 0.211333

iteration 530 / 1000: loss 0.228644

iteration 540 / 1000: loss 0.238444

iteration 550 / 1000: loss 0.210429

iteration 560 / 1000: loss 0.215501

iteration 570 / 1000: loss 0.233994

iteration 580 / 1000: loss 0.219790

iteration 590 / 1000: loss 0.226758

iteration 600 / 1000: loss 0.212030

iteration 610 / 1000: loss 0.227099

iteration 620 / 1000: loss 0.230506

iteration 630 / 1000: loss 0.226286

iteration 640 / 1000: loss 0.234059

iteration 650 / 1000: loss 0.228732

iteration 660 / 1000: loss 0.219503

iteration 670 / 1000: loss 0.221989

iteration 680 / 1000: loss 0.224399

iteration 690 / 1000: loss 0.218559

iteration 700 / 1000: loss 0.228974

iteration 710 / 1000: loss 0.219922

iteration 720 / 1000: loss 0.226182

iteration 730 / 1000: loss 0.225182

iteration 740 / 1000: loss 0.230519

iteration 750 / 1000: loss 0.216343

iteration 760 / 1000: loss 0.205160

iteration 770 / 1000: loss 0.228262

iteration 780 / 1000: loss 0.230450

iteration 790 / 1000: loss 0.222103

iteration 800 / 1000: loss 0.215900

iteration 810 / 1000: loss 0.215989

iteration 820 / 1000: loss 0.221445

iteration 830 / 1000: loss 0.207894

iteration 840 / 1000: loss 0.211124

iteration 850 / 1000: loss 0.220153

iteration 860 / 1000: loss 0.210921

iteration 870 / 1000: loss 0.218330

iteration 880 / 1000: loss 0.222510

iteration 890 / 1000: loss 0.219810

iteration 900 / 1000: loss 0.222990

iteration 910 / 1000: loss 0.229976

iteration 920 / 1000: loss 0.213337

iteration 930 / 1000: loss 0.214479

iteration 940 / 1000: loss 0.205117

iteration 950 / 1000: loss 0.219531

iteration 960 / 1000: loss 0.222700

iteration 970 / 1000: loss 0.239009

iteration 980 / 1000: loss 0.231653

iteration 990 / 1000: loss 0.221841

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.692325

iteration 10 / 1000: loss 0.348990

iteration 20 / 1000: loss 0.320517

iteration 30 / 1000: loss 0.306642

iteration 40 / 1000: loss 0.288931

iteration 50 / 1000: loss 0.282360

iteration 60 / 1000: loss 0.266180

iteration 70 / 1000: loss 0.275369

iteration 80 / 1000: loss 0.264471

iteration 90 / 1000: loss 0.253551

iteration 100 / 1000: loss 0.247588

iteration 110 / 1000: loss 0.268073

iteration 120 / 1000: loss 0.247568

iteration 130 / 1000: loss 0.253782

iteration 140 / 1000: loss 0.248468

iteration 150 / 1000: loss 0.252858

iteration 160 / 1000: loss 0.243900

iteration 170 / 1000: loss 0.256569

iteration 180 / 1000: loss 0.236897

iteration 190 / 1000: loss 0.246429

iteration 200 / 1000: loss 0.237611

iteration 210 / 1000: loss 0.238560

iteration 220 / 1000: loss 0.242149

iteration 230 / 1000: loss 0.240667

iteration 240 / 1000: loss 0.240468

iteration 250 / 1000: loss 0.241374

iteration 260 / 1000: loss 0.227822

iteration 270 / 1000: loss 0.235546

iteration 280 / 1000: loss 0.241253

iteration 290 / 1000: loss 0.233187

iteration 300 / 1000: loss 0.229610

iteration 310 / 1000: loss 0.246391

iteration 320 / 1000: loss 0.239032

iteration 330 / 1000: loss 0.224034

iteration 340 / 1000: loss 0.230667

iteration 350 / 1000: loss 0.241419

iteration 360 / 1000: loss 0.219488

iteration 370 / 1000: loss 0.236607

iteration 380 / 1000: loss 0.239768

iteration 390 / 1000: loss 0.225350

iteration 400 / 1000: loss 0.239179

iteration 410 / 1000: loss 0.241943

iteration 420 / 1000: loss 0.242744

iteration 430 / 1000: loss 0.229928

iteration 440 / 1000: loss 0.231840

iteration 450 / 1000: loss 0.244461

iteration 460 / 1000: loss 0.247348

iteration 470 / 1000: loss 0.233191

iteration 480 / 1000: loss 0.227117

iteration 490 / 1000: loss 0.246858

iteration 500 / 1000: loss 0.214954

iteration 510 / 1000: loss 0.236938

iteration 520 / 1000: loss 0.235639

iteration 530 / 1000: loss 0.214323

iteration 540 / 1000: loss 0.219438

iteration 550 / 1000: loss 0.219027

iteration 560 / 1000: loss 0.234369

iteration 570 / 1000: loss 0.228973

iteration 580 / 1000: loss 0.209489

iteration 590 / 1000: loss 0.224964

iteration 600 / 1000: loss 0.221997

iteration 610 / 1000: loss 0.217666

iteration 620 / 1000: loss 0.216852

iteration 630 / 1000: loss 0.228495

iteration 640 / 1000: loss 0.232136

iteration 650 / 1000: loss 0.226374

iteration 660 / 1000: loss 0.232476

iteration 670 / 1000: loss 0.227136

iteration 680 / 1000: loss 0.216802

iteration 690 / 1000: loss 0.230402

iteration 700 / 1000: loss 0.221749

iteration 710 / 1000: loss 0.234945

iteration 720 / 1000: loss 0.224571

iteration 730 / 1000: loss 0.229933

iteration 740 / 1000: loss 0.238410

iteration 750 / 1000: loss 0.232855

iteration 760 / 1000: loss 0.217409

iteration 770 / 1000: loss 0.219813

iteration 780 / 1000: loss 0.230351

iteration 790 / 1000: loss 0.230363

iteration 800 / 1000: loss 0.219990

iteration 810 / 1000: loss 0.224659

iteration 820 / 1000: loss 0.216315

iteration 830 / 1000: loss 0.223469

iteration 840 / 1000: loss 0.210466

iteration 850 / 1000: loss 0.206708

iteration 860 / 1000: loss 0.227760

iteration 870 / 1000: loss 0.225196

iteration 880 / 1000: loss 0.228807

iteration 890 / 1000: loss 0.224792

iteration 900 / 1000: loss 0.211622

iteration 910 / 1000: loss 0.224940

iteration 920 / 1000: loss 0.227662

iteration 930 / 1000: loss 0.213802

iteration 940 / 1000: loss 0.225000

iteration 950 / 1000: loss 0.219497

iteration 960 / 1000: loss 0.203011

iteration 970 / 1000: loss 0.216575

iteration 980 / 1000: loss 0.213788

iteration 990 / 1000: loss 0.229654

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.695570

iteration 10 / 1000: loss 0.358489

iteration 20 / 1000: loss 0.324250

iteration 30 / 1000: loss 0.309067

iteration 40 / 1000: loss 0.300651

iteration 50 / 1000: loss 0.283480

iteration 60 / 1000: loss 0.266650

iteration 70 / 1000: loss 0.284909

iteration 80 / 1000: loss 0.257433

iteration 90 / 1000: loss 0.267413

iteration 100 / 1000: loss 0.262605

iteration 110 / 1000: loss 0.256809

iteration 120 / 1000: loss 0.247760

iteration 130 / 1000: loss 0.262222

iteration 140 / 1000: loss 0.241906

iteration 150 / 1000: loss 0.242044

iteration 160 / 1000: loss 0.252166

iteration 170 / 1000: loss 0.237245

iteration 180 / 1000: loss 0.237634

iteration 190 / 1000: loss 0.243252

iteration 200 / 1000: loss 0.249584

iteration 210 / 1000: loss 0.254432

iteration 220 / 1000: loss 0.234611

iteration 230 / 1000: loss 0.234032

iteration 240 / 1000: loss 0.244641

iteration 250 / 1000: loss 0.247834

iteration 260 / 1000: loss 0.240780

iteration 270 / 1000: loss 0.238255

iteration 280 / 1000: loss 0.245307

iteration 290 / 1000: loss 0.238766

iteration 300 / 1000: loss 0.236245

iteration 310 / 1000: loss 0.226554

iteration 320 / 1000: loss 0.230845

iteration 330 / 1000: loss 0.248675

iteration 340 / 1000: loss 0.245221

iteration 350 / 1000: loss 0.239994

iteration 360 / 1000: loss 0.227640

iteration 370 / 1000: loss 0.230542

iteration 380 / 1000: loss 0.230962

iteration 390 / 1000: loss 0.240206

iteration 400 / 1000: loss 0.233180

iteration 410 / 1000: loss 0.235403

iteration 420 / 1000: loss 0.239504

iteration 430 / 1000: loss 0.234912

iteration 440 / 1000: loss 0.239808

iteration 450 / 1000: loss 0.231414

iteration 460 / 1000: loss 0.228698

iteration 470 / 1000: loss 0.227453

iteration 480 / 1000: loss 0.240496

iteration 490 / 1000: loss 0.223695

iteration 500 / 1000: loss 0.225473

iteration 510 / 1000: loss 0.227877

iteration 520 / 1000: loss 0.211264

iteration 530 / 1000: loss 0.235007

iteration 540 / 1000: loss 0.232936

iteration 550 / 1000: loss 0.227349

iteration 560 / 1000: loss 0.221485

iteration 570 / 1000: loss 0.217084

iteration 580 / 1000: loss 0.234745

iteration 590 / 1000: loss 0.230357

iteration 600 / 1000: loss 0.224389

iteration 610 / 1000: loss 0.238229

iteration 620 / 1000: loss 0.229789

iteration 630 / 1000: loss 0.217311

iteration 640 / 1000: loss 0.232774

iteration 650 / 1000: loss 0.228463

iteration 660 / 1000: loss 0.234703

iteration 670 / 1000: loss 0.230520

iteration 680 / 1000: loss 0.229772

iteration 690 / 1000: loss 0.230936

iteration 700 / 1000: loss 0.233094

iteration 710 / 1000: loss 0.226290

iteration 720 / 1000: loss 0.221242

iteration 730 / 1000: loss 0.232349

iteration 740 / 1000: loss 0.227636

iteration 750 / 1000: loss 0.224199

iteration 760 / 1000: loss 0.228650

iteration 770 / 1000: loss 0.231615

iteration 780 / 1000: loss 0.237092

iteration 790 / 1000: loss 0.222374

iteration 800 / 1000: loss 0.229114

iteration 810 / 1000: loss 0.217832

iteration 820 / 1000: loss 0.229883

iteration 830 / 1000: loss 0.225539

iteration 840 / 1000: loss 0.238435

iteration 850 / 1000: loss 0.222694

iteration 860 / 1000: loss 0.234094

iteration 870 / 1000: loss 0.232754

iteration 880 / 1000: loss 0.217546

iteration 890 / 1000: loss 0.214160

iteration 900 / 1000: loss 0.214143

iteration 910 / 1000: loss 0.237570

iteration 920 / 1000: loss 0.203175

iteration 930 / 1000: loss 0.220957

iteration 940 / 1000: loss 0.225102

iteration 950 / 1000: loss 0.224513

iteration 960 / 1000: loss 0.228420

iteration 970 / 1000: loss 0.218036

iteration 980 / 1000: loss 0.217627

iteration 990 / 1000: loss 0.228563

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.692300

iteration 10 / 1000: loss 0.363759

iteration 20 / 1000: loss 0.321134

iteration 30 / 1000: loss 0.303553

iteration 40 / 1000: loss 0.279851

iteration 50 / 1000: loss 0.273401

iteration 60 / 1000: loss 0.276503

iteration 70 / 1000: loss 0.263057

iteration 80 / 1000: loss 0.268349

iteration 90 / 1000: loss 0.262398

iteration 100 / 1000: loss 0.243155

iteration 110 / 1000: loss 0.259696

iteration 120 / 1000: loss 0.252532

iteration 130 / 1000: loss 0.247704

iteration 140 / 1000: loss 0.246133

iteration 150 / 1000: loss 0.242410

iteration 160 / 1000: loss 0.259494

iteration 170 / 1000: loss 0.240324

iteration 180 / 1000: loss 0.252781

iteration 190 / 1000: loss 0.245092

iteration 200 / 1000: loss 0.247156

iteration 210 / 1000: loss 0.243832

iteration 220 / 1000: loss 0.228424

iteration 230 / 1000: loss 0.233261

iteration 240 / 1000: loss 0.237502

iteration 250 / 1000: loss 0.236427

iteration 260 / 1000: loss 0.242419

iteration 270 / 1000: loss 0.233415

iteration 280 / 1000: loss 0.235680

iteration 290 / 1000: loss 0.226453

iteration 300 / 1000: loss 0.236692

iteration 310 / 1000: loss 0.239065

iteration 320 / 1000: loss 0.226070

iteration 330 / 1000: loss 0.229819

iteration 340 / 1000: loss 0.232613

iteration 350 / 1000: loss 0.237716

iteration 360 / 1000: loss 0.234173

iteration 370 / 1000: loss 0.221916

iteration 380 / 1000: loss 0.217280

iteration 390 / 1000: loss 0.230083

iteration 400 / 1000: loss 0.232991

iteration 410 / 1000: loss 0.229553

iteration 420 / 1000: loss 0.226135

iteration 430 / 1000: loss 0.230722

iteration 440 / 1000: loss 0.238430

iteration 450 / 1000: loss 0.233023

iteration 460 / 1000: loss 0.229875

iteration 470 / 1000: loss 0.232320

iteration 480 / 1000: loss 0.210167

iteration 490 / 1000: loss 0.215861

iteration 500 / 1000: loss 0.230833

iteration 510 / 1000: loss 0.230072

iteration 520 / 1000: loss 0.220003

iteration 530 / 1000: loss 0.224501

iteration 540 / 1000: loss 0.227041

iteration 550 / 1000: loss 0.227476

iteration 560 / 1000: loss 0.225907

iteration 570 / 1000: loss 0.240346

iteration 580 / 1000: loss 0.224364

iteration 590 / 1000: loss 0.208407

iteration 600 / 1000: loss 0.220586

iteration 610 / 1000: loss 0.221253

iteration 620 / 1000: loss 0.211099

iteration 630 / 1000: loss 0.222597

iteration 640 / 1000: loss 0.220468

iteration 650 / 1000: loss 0.226549

iteration 660 / 1000: loss 0.214090

iteration 670 / 1000: loss 0.227821

iteration 680 / 1000: loss 0.222238

iteration 690 / 1000: loss 0.213874

iteration 700 / 1000: loss 0.215205

iteration 710 / 1000: loss 0.211720

iteration 720 / 1000: loss 0.221692

iteration 730 / 1000: loss 0.217034

iteration 740 / 1000: loss 0.216168

iteration 750 / 1000: loss 0.231321

iteration 760 / 1000: loss 0.215882

iteration 770 / 1000: loss 0.226286

iteration 780 / 1000: loss 0.216912

iteration 790 / 1000: loss 0.215957

iteration 800 / 1000: loss 0.219558

iteration 810 / 1000: loss 0.219957

iteration 820 / 1000: loss 0.204205

iteration 830 / 1000: loss 0.209893

iteration 840 / 1000: loss 0.222102

iteration 850 / 1000: loss 0.209545

iteration 860 / 1000: loss 0.222215

iteration 870 / 1000: loss 0.222040

iteration 880 / 1000: loss 0.211369

iteration 890 / 1000: loss 0.211190

iteration 900 / 1000: loss 0.215895

iteration 910 / 1000: loss 0.223128

iteration 920 / 1000: loss 0.225074

iteration 930 / 1000: loss 0.213946

iteration 940 / 1000: loss 0.210757

iteration 950 / 1000: loss 0.210781

iteration 960 / 1000: loss 0.233870

iteration 970 / 1000: loss 0.216172

iteration 980 / 1000: loss 0.219639

iteration 990 / 1000: loss 0.225270

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.686539

iteration 10 / 1000: loss 0.370893

iteration 20 / 1000: loss 0.317462

iteration 30 / 1000: loss 0.307418

iteration 40 / 1000: loss 0.276782

iteration 50 / 1000: loss 0.285975

iteration 60 / 1000: loss 0.281196

iteration 70 / 1000: loss 0.267959

iteration 80 / 1000: loss 0.261921

iteration 90 / 1000: loss 0.272718

iteration 100 / 1000: loss 0.262308

iteration 110 / 1000: loss 0.260984

iteration 120 / 1000: loss 0.243462

iteration 130 / 1000: loss 0.232496

iteration 140 / 1000: loss 0.243209

iteration 150 / 1000: loss 0.245624

iteration 160 / 1000: loss 0.255214

iteration 170 / 1000: loss 0.246456

iteration 180 / 1000: loss 0.241859

iteration 190 / 1000: loss 0.242453

iteration 200 / 1000: loss 0.235716

iteration 210 / 1000: loss 0.234309

iteration 220 / 1000: loss 0.238089

iteration 230 / 1000: loss 0.237669

iteration 240 / 1000: loss 0.237358

iteration 250 / 1000: loss 0.244546

iteration 260 / 1000: loss 0.246967

iteration 270 / 1000: loss 0.247141

iteration 280 / 1000: loss 0.234510

iteration 290 / 1000: loss 0.240919

iteration 300 / 1000: loss 0.236771

iteration 310 / 1000: loss 0.242945

iteration 320 / 1000: loss 0.233953

iteration 330 / 1000: loss 0.235631

iteration 340 / 1000: loss 0.240317

iteration 350 / 1000: loss 0.239660

iteration 360 / 1000: loss 0.226390

iteration 370 / 1000: loss 0.234067

iteration 380 / 1000: loss 0.241559

iteration 390 / 1000: loss 0.240896

iteration 400 / 1000: loss 0.227553

iteration 410 / 1000: loss 0.232967

iteration 420 / 1000: loss 0.244276

iteration 430 / 1000: loss 0.233991

iteration 440 / 1000: loss 0.224573

iteration 450 / 1000: loss 0.243582

iteration 460 / 1000: loss 0.233078

iteration 470 / 1000: loss 0.222379

iteration 480 / 1000: loss 0.241229

iteration 490 / 1000: loss 0.228987

iteration 500 / 1000: loss 0.238099

iteration 510 / 1000: loss 0.228300

iteration 520 / 1000: loss 0.225139

iteration 530 / 1000: loss 0.225490

iteration 540 / 1000: loss 0.220073

iteration 550 / 1000: loss 0.223593

iteration 560 / 1000: loss 0.229766

iteration 570 / 1000: loss 0.229151

iteration 580 / 1000: loss 0.239957

iteration 590 / 1000: loss 0.219428

iteration 600 / 1000: loss 0.224717

iteration 610 / 1000: loss 0.215571

iteration 620 / 1000: loss 0.219876

iteration 630 / 1000: loss 0.214830

iteration 640 / 1000: loss 0.221080

iteration 650 / 1000: loss 0.232467

iteration 660 / 1000: loss 0.227414

iteration 670 / 1000: loss 0.221418

iteration 680 / 1000: loss 0.234883

iteration 690 / 1000: loss 0.210510

iteration 700 / 1000: loss 0.238471

iteration 710 / 1000: loss 0.219434

iteration 720 / 1000: loss 0.242129

iteration 730 / 1000: loss 0.238481

iteration 740 / 1000: loss 0.242006

iteration 750 / 1000: loss 0.219919

iteration 760 / 1000: loss 0.229061

iteration 770 / 1000: loss 0.211147

iteration 780 / 1000: loss 0.208960

iteration 790 / 1000: loss 0.219660

iteration 800 / 1000: loss 0.226121

iteration 810 / 1000: loss 0.222475

iteration 820 / 1000: loss 0.229800

iteration 830 / 1000: loss 0.222815

iteration 840 / 1000: loss 0.231926

iteration 850 / 1000: loss 0.234573

iteration 860 / 1000: loss 0.225939

iteration 870 / 1000: loss 0.219069

iteration 880 / 1000: loss 0.228357

iteration 890 / 1000: loss 0.225287

iteration 900 / 1000: loss 0.232924

iteration 910 / 1000: loss 0.212157

iteration 920 / 1000: loss 0.235149

iteration 930 / 1000: loss 0.228447

iteration 940 / 1000: loss 0.203405

iteration 950 / 1000: loss 0.223894

iteration 960 / 1000: loss 0.226521

iteration 970 / 1000: loss 0.223319

iteration 980 / 1000: loss 0.224591

iteration 990 / 1000: loss 0.219805

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 1000: loss 0.690613

iteration 10 / 1000: loss 0.351338

iteration 20 / 1000: loss 0.322419

iteration 30 / 1000: loss 0.299471

iteration 40 / 1000: loss 0.285467

iteration 50 / 1000: loss 0.277947

iteration 60 / 1000: loss 0.266197

iteration 70 / 1000: loss 0.279122

iteration 80 / 1000: loss 0.261543

iteration 90 / 1000: loss 0.255722

iteration 100 / 1000: loss 0.258051

iteration 110 / 1000: loss 0.269853

iteration 120 / 1000: loss 0.242061

iteration 130 / 1000: loss 0.255182

iteration 140 / 1000: loss 0.248185

iteration 150 / 1000: loss 0.264188

iteration 160 / 1000: loss 0.247811

iteration 170 / 1000: loss 0.249105

iteration 180 / 1000: loss 0.235434

iteration 190 / 1000: loss 0.250608

iteration 200 / 1000: loss 0.258968

iteration 210 / 1000: loss 0.245373

iteration 220 / 1000: loss 0.249339

iteration 230 / 1000: loss 0.241501

iteration 240 / 1000: loss 0.236172

iteration 250 / 1000: loss 0.229713

iteration 260 / 1000: loss 0.233922

iteration 270 / 1000: loss 0.246254

iteration 280 / 1000: loss 0.232121

iteration 290 / 1000: loss 0.225480

iteration 300 / 1000: loss 0.259683

iteration 310 / 1000: loss 0.235217

iteration 320 / 1000: loss 0.240050

iteration 330 / 1000: loss 0.238971

iteration 340 / 1000: loss 0.235066

iteration 350 / 1000: loss 0.226929

iteration 360 / 1000: loss 0.237286

iteration 370 / 1000: loss 0.233348

iteration 380 / 1000: loss 0.236615

iteration 390 / 1000: loss 0.232381

iteration 400 / 1000: loss 0.225922

iteration 410 / 1000: loss 0.233079

iteration 420 / 1000: loss 0.234174

iteration 430 / 1000: loss 0.225896

iteration 440 / 1000: loss 0.234972

iteration 450 / 1000: loss 0.233061

iteration 460 / 1000: loss 0.233806

iteration 470 / 1000: loss 0.235120

iteration 480 / 1000: loss 0.224871

iteration 490 / 1000: loss 0.236963

iteration 500 / 1000: loss 0.214821

iteration 510 / 1000: loss 0.221394

iteration 520 / 1000: loss 0.229936

iteration 530 / 1000: loss 0.227716

iteration 540 / 1000: loss 0.228005

iteration 550 / 1000: loss 0.231032

iteration 560 / 1000: loss 0.236600

iteration 570 / 1000: loss 0.217327

iteration 580 / 1000: loss 0.233030

iteration 590 / 1000: loss 0.244304

iteration 600 / 1000: loss 0.213860

iteration 610 / 1000: loss 0.232491

iteration 620 / 1000: loss 0.217764

iteration 630 / 1000: loss 0.216813

iteration 640 / 1000: loss 0.219536

iteration 650 / 1000: loss 0.239934

iteration 660 / 1000: loss 0.239394

iteration 670 / 1000: loss 0.223490

iteration 680 / 1000: loss 0.220620

iteration 690 / 1000: loss 0.230945

iteration 700 / 1000: loss 0.227732

iteration 710 / 1000: loss 0.224032

iteration 720 / 1000: loss 0.247752

iteration 730 / 1000: loss 0.234144

iteration 740 / 1000: loss 0.231850

iteration 750 / 1000: loss 0.226566

iteration 760 / 1000: loss 0.228358

iteration 770 / 1000: loss 0.238045

iteration 780 / 1000: loss 0.216584

iteration 790 / 1000: loss 0.220729

iteration 800 / 1000: loss 0.231510

iteration 810 / 1000: loss 0.212132

iteration 820 / 1000: loss 0.225368

iteration 830 / 1000: loss 0.236915

iteration 840 / 1000: loss 0.222729

iteration 850 / 1000: loss 0.231259

iteration 860 / 1000: loss 0.217491

iteration 870 / 1000: loss 0.236536

iteration 880 / 1000: loss 0.215961

iteration 890 / 1000: loss 0.222086

iteration 900 / 1000: loss 0.214021

iteration 910 / 1000: loss 0.219459

iteration 920 / 1000: loss 0.227918

iteration 930 / 1000: loss 0.224764

iteration 940 / 1000: loss 0.227398

iteration 950 / 1000: loss 0.230737

iteration 960 / 1000: loss 0.224591

iteration 970 / 1000: loss 0.236471

iteration 980 / 1000: loss 0.226879

iteration 990 / 1000: loss 0.211791

[[2619, 168], [165, 1648]]

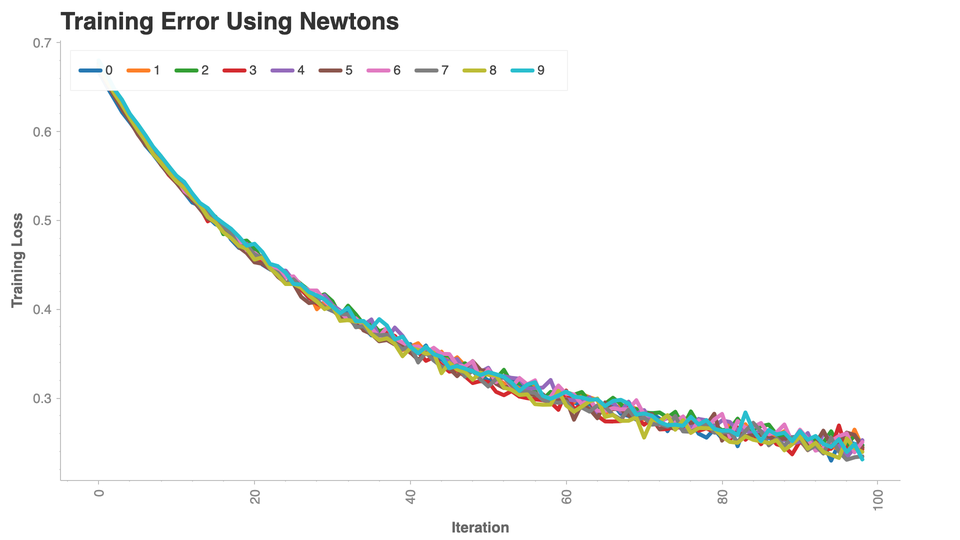

all_losses_df = np.array(all_losses)

all_losses_df = all_losses_df.reshape(len(all_losses_df), len(all_losses_df[0]))

all_losses_df = pd.DataFrame(all_losses_df).T

all_losses_df["iter"] = all_losses_df.index

all_losses_df = all_losses_df.melt(id_vars=["iter"])

import chartify

ch = chartify.Chart(blank_labels=True)

ch.set_title("Training Error For Logistic Regression Using Gradient Descent")

ch.plot.line(

data_frame=all_losses_df,

color_column="variable",

x_column='iter',

y_column="value")

ch.axes.set_yaxis_label("Training Loss")

ch.axes.set_xaxis_label("Iteration")

ch.axes.set_xaxis_tick_orientation("vertical")

ch.show('png')

y_test_1_y_pred_1 = []

y_test_0_y_pred_0 = []

y_test_1_y_pred_0 = []

y_test_0_y_pred_1 = []

all_losses = []

kf = KFold(n_splits=10, shuffle=True)

for train_index, test_index in kf.split(X_all):

print("------------------------------------ NEW TEST ------------------------------------")

X_train, X_test = X_all[train_index], X_all[test_index]

y_train, y_test = y_all[train_index], y_all[test_index]

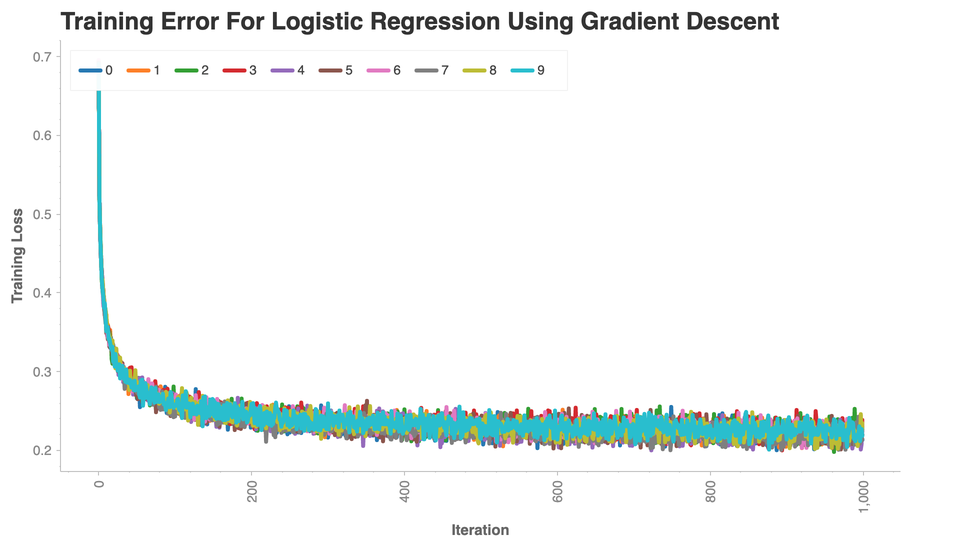

cl = LogisticRegression(method="newton")

loss_data = cl.train(X_train, y_train, learning_rate=0.03, batch_size=4600, training_iterations=100)

loss_data = loss_data[1:] # First loss point is incorrect as old weights are initialized as random.

y_pred = cl.predict(X_test)

all_losses.append(loss_data)

joined = pd.concat([pd.DataFrame(y_pred, columns=["y_pred"]), pd.DataFrame(y_test, columns=["y_test"])], axis=1)

joined["correct"] = joined["y_pred"] == joined["y_test"]

y_test_1_y_pred_1.append(joined.loc[(joined["correct"] == True) & (joined["y_test"] == 1),"correct"].count()) # /len(y_test)

y_test_0_y_pred_0.append(joined.loc[(joined["correct"] == True) & (joined["y_test"] == 0),"correct"].count()) # /len(y_test)

y_test_1_y_pred_0.append(joined.loc[(joined["correct"] == False) & (joined["y_test"] == 1),"correct"].count()) # /len(y_test)

y_test_0_y_pred_1.append(joined.loc[(joined["correct"] == False) & (joined["y_test"] == 0),"correct"].count()) # /len(y_test)

y_test_0_y_pred_0_avg = sum(y_test_0_y_pred_0)#/len(y_test_0_y_pred_0)

y_test_0_y_pred_1_avg = sum(y_test_0_y_pred_1)#/len(y_test_0_y_pred_1)

y_test_1_y_pred_0_avg = sum(y_test_1_y_pred_0)#/len(y_test_1_y_pred_0)

y_test_1_y_pred_1_avg = sum(y_test_1_y_pred_1)#/len(y_test_1_y_pred_1)

[[y_test_0_y_pred_0_avg, y_test_0_y_pred_1_avg], [y_test_1_y_pred_0_avg, y_test_1_y_pred_1_avg]]

all_losses_df = np.array(all_losses)

all_losses_df = all_losses_df.reshape(len(all_losses_df), len(all_losses_df[0]))

all_losses_df = pd.DataFrame(all_losses_df).T

all_losses_df["iter"] = all_losses_df.index

all_losses_df = all_losses_df.melt(id_vars=["iter"])

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 100: loss 7.980334

iteration 10 / 100: loss 0.554379

iteration 20 / 100: loss 0.466344

iteration 30 / 100: loss 0.407950

iteration 40 / 100: loss 0.361385

iteration 50 / 100: loss 0.325229

iteration 60 / 100: loss 0.296917

iteration 70 / 100: loss 0.285433

iteration 80 / 100: loss 0.264095

iteration 90 / 100: loss 0.249518

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 100: loss -42.210418

iteration 10 / 100: loss 0.560659

iteration 20 / 100: loss 0.467110

iteration 30 / 100: loss 0.399625

iteration 40 / 100: loss 0.367757

iteration 50 / 100: loss 0.330008

iteration 60 / 100: loss 0.311408

iteration 70 / 100: loss 0.292069

iteration 80 / 100: loss 0.255831

iteration 90 / 100: loss 0.260549

------------------------------------ NEW TEST ------------------------------------

iteration 0 / 100: loss -31.696240

iteration 10 / 100: loss 0.554131

iteration 20 / 100: loss 0.466298

iteration 30 / 100: loss 0.411266

iteration 40 / 100: loss 0.352328

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

/var/folders/w3/8cmbpqt17zgg1lcgbdw84r6c0000gn/T/ipykernel_6896/168532853.py in <module>

15 cl = LogisticRegression(method="newton")

16

---> 17 loss_data = cl.train(X_train, y_train, learning_rate=0.03, batch_size=4600, training_iterations=100)

18 loss_data = loss_data[1:] # First loss point is incorrect as old weights are initialized as random.

19 y_pred = cl.predict(X_test)

/var/folders/w3/8cmbpqt17zgg1lcgbdw84r6c0000gn/T/ipykernel_6896/3123555176.py in train(self, X_train, y_train, batch_size, learning_rate, training_iterations, reg)

32 X_batch = X_train

33 y_batch = y_train

---> 34 loss, dW = self.loss(X_batch, y_batch, regularization=reg)

35 all_loss.append(loss)

36 self.old_weights = self.weights

/var/folders/w3/8cmbpqt17zgg1lcgbdw84r6c0000gn/T/ipykernel_6896/3123555176.py in loss(self, X_train, y_train, regularization)

84 return logistic_regression_loss_vectorized(self.weights, X_train, y_train, regularization)

85 elif self.method == "newton":

---> 86 return newtons_method_loss(self.weights, self.old_weights, X_train, y_train, regularization)

87

88

/var/folders/w3/8cmbpqt17zgg1lcgbdw84r6c0000gn/T/ipykernel_6896/1435915262.py in newtons_method_loss(W, W_old, X, y, reg)

45 D = D.reshape(len(D))

46 D = np.diag(D)

---> 47 H = X.T @ D @ X

48 weight_change = (W - W_old)

49 grad_term = weight_change.T @ dW

KeyboardInterrupt:

[[y_test_0_y_pred_0_avg, y_test_0_y_pred_1_avg], [y_test_1_y_pred_0_avg, y_test_1_y_pred_1_avg]]

[[2647, 140], [258, 1555]]

import chartify

ch = chartify.Chart(blank_labels=True)

ch.set_title("Training Error Using Newtons")

ch.plot.line(

data_frame=all_losses_df,

color_column="variable",

x_column='iter',

y_column="value")

ch.axes.set_yaxis_label("Training Loss")

ch.axes.set_xaxis_label("Iteration")

ch.axes.set_xaxis_tick_orientation("vertical")

ch.show('png')